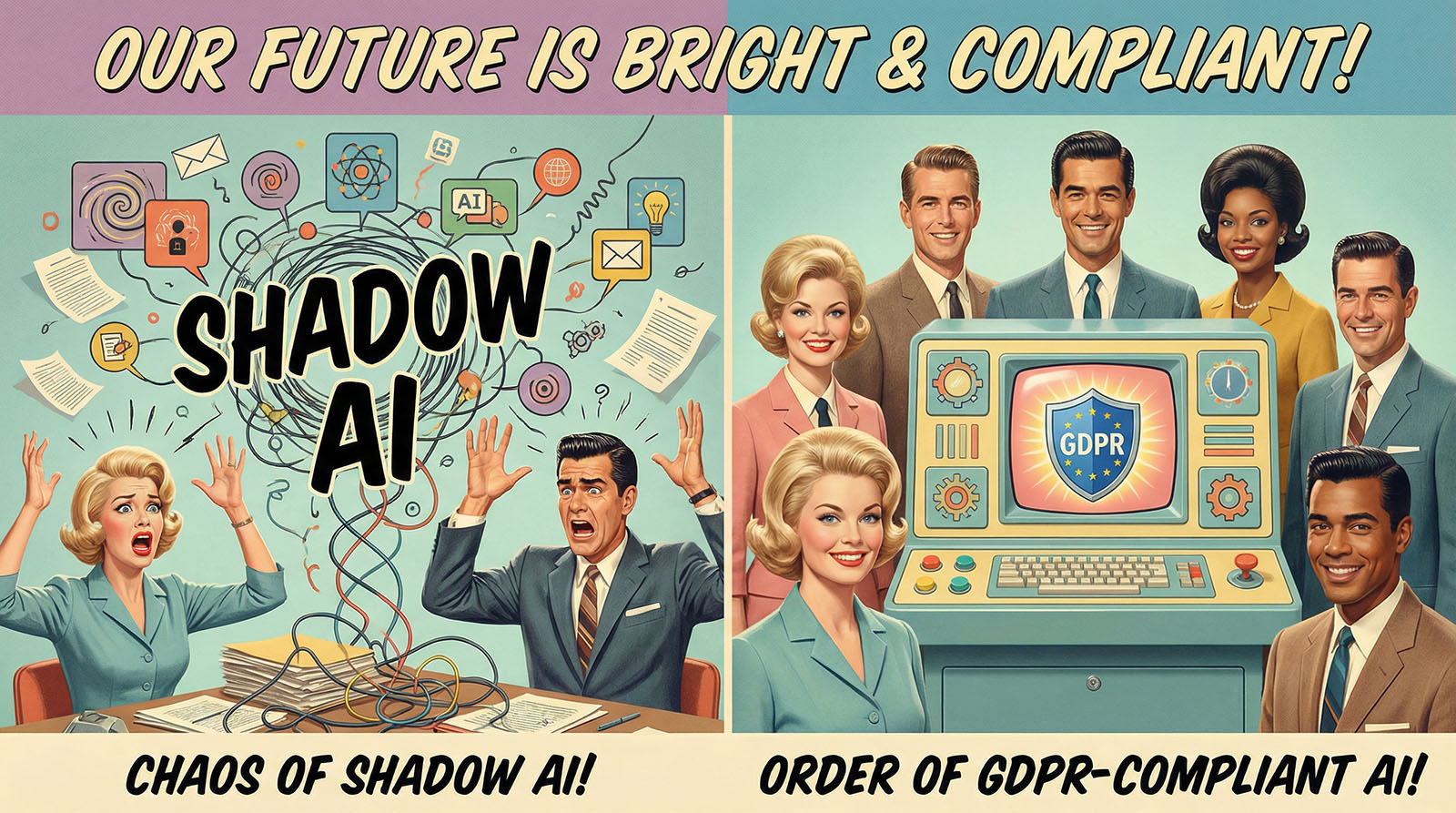

There is a high probability that someone in your company has been using AI for some time now, without any guidelines, approval, or data processing agreement (DPA).

In marketing, texts are polished with ChatGPT; in sales, customer data ends up in prompts; in development, code is cross-checked using AI tools. All of this is well-intentioned, but from the perspective of the GDPR, the EU AI Regulation, and corporate compliance it is a ticking time bomb: shadow AI.

At the same time, it would be absurd to forgo the productivity gains of modern large language models (LLMs). The trick is to bring AI and data protection together, with a clear framework that allows for innovation and limits risks.

As a certified AI expert (MMAI® Business School certificate, Academy4AI) and future member of the German Federal AI Association, I support companies precisely at this intersection of technology, law, and governance. As a WooCommerce specialist and WordPress developer for SMEs and industry, I also know the practical perspective from projects.

This article is about:

- how the GDPR and the EU AI Regulation (AI Act) interact,

- which risks are truly relevant when using ChatGPT, Claude, Gemini, and similar platforms,

- which practical rules for privacy-friendly AI use you should introduce,

- and why a platform like InnoGPT is an exciting option if you want to give your teams a GDPR-compliant AI environment.

In this article, I share my professional perspective as a certified AI expert. However, this article does not replace individual legal advice. If you require a binding assessment of data protection law, I recommend consulting a qualified lawyer or data protection officer.

1. The binding legal framework for 2025: GDPR + EU AI Regulation (AI Act)

1.1 GDPR remains the basis for all personal data

As soon as you feed personal data into AI systems, whether for training, responding to queries, or analysis, the GDPR applies. Among other things, you must:

- have a legal basis under Article 6 of the GDPR,

- guarantee transparency to those affected,

- observe data minimization,

- implement technical and organizational measures (TOMs),

- and, if applicable, carry out data protection impact assessments (DPIAs). (Handelsblatt Live)

The German Data Protection Conference (DSK) has published detailed guidance on AI and data protection. It makes clear that whoever selects and uses AI applications is responsible for ensuring that this selection complies with data protection regulations, including the choice of provider, data flows, and configuration. (data protection conference)

The EDPB (European Data Protection Board), through its ChatGPT Task Force, has also addressed specific questions regarding the legality of web scraping, transparency, and accuracy requirements for LLMs. (EDPB)

In short: even though AI is new, it does not operate in a legal vacuum when it comes to data protection law.

1.2 EU AI Regulation (AI Act): Risk-based & governance-driven

With the EU AI Regulation (Regulation (EU) 2024/1689), the EU adopted the world's first comprehensive legal framework for AI systems in 2024. The AI Act has been in force since August 1, 2024 and establishes a risk-based approach: from minimal risk to limited risk to high-risk AI and prohibited practices. (EUR-Lex)

Important points:

- Prohibitions on certain AI practices (e.g., certain forms of manipulative systems) and requirements for AI literacy have been in effect since February 2, 2025. (Artificial Intelligence Law EU)

- The majority of duties, especially for high-risk AI, will take effect in stages by August 2, 2026, with further specifications and guidelines from the EU Commission and the new European AI Office. (AI Act Service Desk)

- Among other things, the AI Act establishes requirements for risk management, data quality, logging, technical documentation, transparency, human oversight, and governance structures. (EUR-Lex)

The EU is currently discussing whether to extend the deadlines for certain high-risk AI obligations until 2027 to give companies more time to implement the changes. As of today (December 11, 2025), this is a political proposal that still has to go through the legislative process. (Reuters)
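If you want to keep these dates at hand in an internal compliance tool, a small lookup is enough. The following is only a sketch: the function and variable names are my own, the milestone labels are shortened from the points above, and the exact day for the GPAI rules (August 2, 2025) is filled in by me, since this article only names the month. The 2026 entry may still move if the proposed extension is adopted.

```python
from datetime import date

# Milestones as summarized in this article (labels shortened, names hypothetical).
# The high-risk date is subject to the extension currently under discussion.
AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "AI Act in force"),
    (date(2025, 2, 2), "Prohibited practices and AI literacy apply"),
    (date(2025, 8, 2), "General-purpose AI (GPAI) rules apply"),
    (date(2026, 8, 2), "Majority of obligations apply, incl. high-risk AI"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of all milestones that have started on or before `on`."""
    return [label for start, label in AI_ACT_MILESTONES if start <= on]


if __name__ == "__main__":
    for label in milestones_in_effect(date(2025, 12, 11)):
        print(label)
```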

Important for you:

The GDPR remains fully applicable; the AI Act supplements it. In case of doubt, the following applies:

"The AI Act regulates what an AI system is permitted to do; the GDPR regulates how you are permitted to handle personal data." (Handelsblatt Live)

1.3 AI literacy and governance obligations: Why companies need to demonstrate AI competence since 2025

For the first time, the EU AI Regulation provides a clear framework for the organizational responsibility of companies that use AI systems, regardless of whether they develop their own models or use external tools.

Two points have been particularly important since 2025:

AI literacy requirement (Art. 4 AI Act) – applicable since February 2025

Companies that provide or use AI systems ("providers" and, above all, "deployers") must ensure that their employees have a sufficient level of AI expertise. In practice, this means:

- Employees must understand how AI works in principle, where the risks lie, and how to work with it safely.

- Companies must provide training, awareness measures, and internal guidelines.

- These measures must be documented in such a way that they can be verified within the framework of accountability.

In other words:

"Since February 2025, using AI has been inextricably linked to demonstrating AI competence."
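How the documentation of training measures might look in its simplest form: a list of training records and a check for employees who use AI but have no documented training yet. This is a minimal sketch with names I made up (`TrainingRecord`, `untrained_employees`); in practice this data would usually live in your HR or learning management system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class TrainingRecord:
    """One documented AI literacy training (hypothetical structure)."""
    employee: str
    course: str
    completed_on: date


def untrained_employees(ai_users: set[str], records: list[TrainingRecord]) -> set[str]:
    """Return all AI users for whom no training record exists."""
    trained = {record.employee for record in records}
    return ai_users - trained


if __name__ == "__main__":
    records = [TrainingRecord("anna", "AI basics and red lines", date(2025, 3, 14))]
    print(untrained_employees({"anna", "ben"}, records))
```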

AI governance – relevant for general-purpose AI since August 2025

With the start of application of the rules on general-purpose AI (GPAI) in August 2025, additional organizational requirements apply, especially for providers, but indirectly also for companies that use such systems productively:

- Structured documentation of the models used,

- Monitoring and logging of usage,

- Processes for incident management, risks, and complaints,

- Clear roles and responsibilities in the use of AI.

Even though the full set of obligations for high-risk AI will not take effect until 2026/2027, one thing is clear:

Without an AI governance concept—i.e., documented responsibilities, guidelines, processes, and training—it will become increasingly difficult for companies to credibly demonstrate AI Act and GDPR compliance.
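The "monitoring and logging of usage" point can start very small. The sketch below writes one JSON line per AI request and deliberately stores only a hash and the length of the prompt instead of its content. All field names are assumptions of mine, not a format required by the AI Act or the GDPR, and a hash of a prompt is not an anonymization technique; it only avoids copying the prompt text into a second system.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def usage_log_entry(user_id: str, tool: str, purpose: str, prompt: str,
                    now: Optional[datetime] = None) -> str:
    """Build one JSON log line describing who used which tool for what."""
    now = now or datetime.now(timezone.utc)
    entry = {
        "timestamp": now.isoformat(),
        "user_id": user_id,
        "tool": tool,
        "purpose": purpose,
        # Store a fingerprint and size only, not the prompt text itself.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    return json.dumps(entry, sort_keys=True)


if __name__ == "__main__":
    print(usage_log_entry("u-17", "approved-platform", "draft product text", "Hello world"))
```

Whether you log per user or only per team is a governance decision of its own and should be agreed with your data protection officer.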

2. What regulatory authorities specifically say about AI & LLMs

To make this more tangible, let's take a brief look at three key sources:

- DSK guidance document "AI and data protection" (2024) – provides companies and public authorities with criteria for selecting and using AI systems: purpose limitation, legal basis, data minimization, transparency, data processing agreements, technical and organizational measures. (data protection conference)

- EDPB ChatGPT Task Force Report (May 2024) – highlights, among other things:

  - how training involving web scraping of personal data should be assessed from a legal perspective,

  - what transparency and information obligations exist towards users,

  - what requirements are placed on the accuracy and fairness of LLM responses. (EDPB)

- National information sheets, e.g., HWR Berlin / Data Protection Officer – show very specifically what data is generated when generative AI is used and how this use can be made privacy-friendly (e.g., no direct identifiers, pseudonymization, no sensitive data in freely available tools). (Data protection HWR Berlin)

The message is similar everywhere:

- Principle: Enter as little personal data as possible into AI systems.

- Companies need clear rules on which tools may be used and how.

- "Just trying it out quickly" is not a legal basis.

3. Typical risks: How shadow AI arises in companies

Some typical situations that I see time and time again:

- Marketing loads customer data (e.g., a CRM export) into any AI web app to "quickly form segments."

- HR has ChatGPT evaluate employment contracts or job applications, including complete personal data.

- Sales copies entire email histories containing personal information into LLMs in order to formulate "better answers."

- Departments use free LLM tools without a corporate account, without a data processing agreement, and without knowing where data is processed.

From a data protection perspective, this raises several issues:

- unresolved roles and responsibilities (controller / processor),

- possible third-country transfers (e.g., USA),

- unclear storage and training use of data,

- missing or insufficient information for those affected.

It is precisely these cases that data protection supervisory authorities have been focusing on more closely in recent months—even going so far as to impose short-term restrictions and conduct audits of individual providers. (StreamLex)
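To get a first feeling for how much shadow AI exists, some companies look at their proxy logs. The following sketch counts requests to well-known AI web apps that are not on the approved list, aggregated per department rather than per person. The input format, the host lists, and the approved host `ai.example.internal` are purely illustrative assumptions; any such evaluation touches employee data itself and should be coordinated with your data protection officer and, where applicable, the works council.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative lists only; maintain your own.
KNOWN_AI_HOSTS = {"chatgpt.com", "claude.ai", "gemini.google.com"}
APPROVED_HOSTS = {"ai.example.internal"}  # hypothetical approved platform


def shadow_ai_hits(requests: list[tuple[str, str]]) -> Counter:
    """Count requests to known but unapproved AI hosts.

    `requests` is a list of (department, url) pairs, e.g. from a proxy log export.
    """
    hits: Counter = Counter()
    for department, url in requests:
        host = urlparse(url).hostname or ""
        if host in KNOWN_AI_HOSTS and host not in APPROVED_HOSTS:
            hits[(department, host)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        ("marketing", "https://chatgpt.com/"),
        ("sales", "https://claude.ai/new"),
        ("dev", "https://ai.example.internal/chat"),
    ]
    for (department, host), count in shadow_ai_hits(sample).items():
        print(department, host, count)
```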

4. 10 basic rules: Using LLMs in a privacy-friendly way (solo & in a team)

Whether you are a sole trader or a medium-sized IT company, the following rules are very helpful in practice for using LLMs in a GDPR-compliant way:

- No sensitive personal data in consumer accounts

  Health data, special categories according to Art. 9 GDPR, confidential employee information, internal contracts, etc. have no place in freely accessible AI front ends. (Data protection HWR Berlin)

- Pseudonymize or anonymize wherever possible

  Instead of "John Doe, IBAN, Project XY for Customer Z," it is preferable to use "Customer A, Budget B, Project in the field of mechanical engineering, Export country D."

- Clear tool strategy: separate private and professional use

  No "I'll just use my private ChatGPT account." Define approved tools and, if in doubt, block problematic domains on the company proxy. (NRW state database)

- Create a company-specific policy ("AI policy")

  Short, understandable, practical: Which tools are permitted? Which data can be included? Who is the contact person for questions? An AI policy is no longer a "nice to have," but a central element of AI governance.

- Clarify the legal basis

  Within the company, you will often rely on legitimate interests (Art. 6(1)(f) GDPR), contract fulfillment, or, where applicable, consent. It is important to maintain clear documentation in the record of processing activities. (data protection conference)

- Check whether a DPIA is needed, especially for sensitive scenarios

  When AI systems significantly interfere with business processes or contain profiling elements, a data protection impact assessment is often mandatory. (data protection conference)

- Log and make traceable

  Who uses which system for what? Logging is not only an IT security issue but also a governance issue, and it fits well with the documentation-oriented logic of the AI Act. (EUR-Lex)

- Choose models and providers carefully

  Check: hosting (EU/EEA?), data processing agreement, storage and training policy, transparency, technical security features. Some providers now explicitly advertise "zero retention" and "no training on customer data." (ASCOMP)

- Train employees, which has also been a legal requirement since February 2025

  Short training sessions, live demos, small use case workshops. The goal: understanding where opportunities lie and where red lines are drawn.

  Since February 2025, the EU AI Regulation (EU AI Act) has explicitly required companies to ensure a sufficient level of AI literacy. Training, internal guidelines, and documented participation have thus become a mandatory part of AI compliance, comparable to data protection or information security training.

- Integrate AI and data protection with your existing web and SEO strategy

  Those who already work cleanly on technical SEO, performance, and structured data have a good basis for clean AI integrations. You may already know my guide to technical SEO and my article on the key SEO trends for 2024: visibility, trust, and legally compliant technologies are intertwined. (saskialund.de)
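The pseudonymization rule from the list above can be supported technically before a prompt ever leaves your network. The sketch below replaces known names with neutral aliases and masks email addresses and IBAN-like strings with placeholders. The function name, the placeholder format, and the regular expressions are my own simplifications; pattern matching will miss many identifiers, and pseudonymized data is still personal data under the GDPR as long as the mapping exists.

```python
import re

# Simplified patterns for illustration; they will not catch every real-world format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}(?:\s?[A-Z0-9]{4}){2,7}(?:\s?[A-Z0-9]{1,3})?\b")


def pseudonymize(text: str, known_names: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Replace known names, email addresses, and IBANs with placeholders.

    Returns the redacted text and a mapping (placeholder -> original)
    that must stay inside the company.
    """
    mapping: dict[str, str] = {}

    # 1) Names you already know, e.g. from the CRM record being discussed.
    for name, alias in known_names.items():
        if name in text:
            text = text.replace(name, alias)
            mapping[alias] = name

    # 2) Pattern-based identifiers, numbered per type.
    for label, pattern in (("EMAIL", EMAIL_RE), ("IBAN", IBAN_RE)):
        matches = list(dict.fromkeys(pattern.findall(text)))  # unique, in order
        for number, original in enumerate(matches, start=1):
            placeholder = f"[{label}_{number}]"
            text = text.replace(original, placeholder)
            mapping[placeholder] = original

    return text, mapping


if __name__ == "__main__":
    prompt = "John Doe (john.doe@example.com) pays via IBAN DE89 3704 0044 0532 0130 00."
    redacted, mapping = pseudonymize(prompt, {"John Doe": "Customer A"})
    print(redacted)
```

The mapping lets you translate the model's answer back into real names internally, without the provider ever seeing them.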

5. Why consumer accounts (free or basic accounts) from ChatGPT & Co. are tricky for companies

Even with improvements in data protection settings, the use of traditional consumer accounts remains problematic in many business contexts:

- data flows to third countries and complex subprocessor chains,

- limited or absent data processing agreements,

- unclear transparency for those affected,

- partial use of submissions for model training (depending on provider/tariff), even though many providers now offer "opt-out" or business options. (eRecht24)

That doesn't mean you can't use such tools at all, but:

- In a business context, they often require considerable coordination, contract review, and additional measures to ensure they are properly secured.

- It is therefore often worthwhile to take a step toward dedicated AI platforms that are explicitly designed for GDPR-compliant use.

6. Data protection-compliant AI platforms – Focus on InnoGPT and Langdock

There are now platforms that bundle various LLMs in an EU-hosted, GDPR-compliant environment. Two of these are InnoGPT and Langdock.

6.1 What sets InnoGPT apart?

Publicly available descriptions provide the following summary:

- InnoGPT bundles leading language models (e.g., GPT-4, GPT-5, Gemini, Claude, Mistral, etc.) in a platform aimed specifically at German and European companies. (sysbus.eu)

- The platform focuses on hosting in Europe and advertises a contractually guaranteed "zero retention policy": customer input is not used to train AI models and is processed exclusively on European servers, meaning that the input does not end up with the original third-country provider. (ASCOMP)

- It addresses typical business requirements such as team functionalities, workflows, and integration into existing processes.

If you would like to take a closer look, please use the following link:

Get to know InnoGPT (affiliate link)

For companies that want to replace shadow AI and at the same time provide their teams with modern tools, this approach is particularly exciting:

Your teams continue to work with powerful models—but in a controlled, documentable, and GDPR-compliant environment.

7. Practical examples: How SMEs and industry could use InnoGPT

Some scenarios I am familiar with from projects and discussions with customers:

- Technical sales and quotation preparation

  - Technical texts, product descriptions, and offers are prepared using InnoGPT.

  - Internally used documents can be integrated using retrieval techniques without the company losing control over the data. (arXiv)

- Knowledge management and documentation

  - Internal guidelines, manuals, and SOPs are made available for Q&A in a secure environment.

  - Employees ask questions such as "What test steps apply to product line X?" InnoGPT provides answers based on internal documents without passing them on to external training systems. (arXiv)

- Marketing and content for B2B websites and shops

  - Content drafts for WooCommerce stores, product pages, and blog articles are created and then reviewed by experts.

  - Because data is stored and processed in Europe, this setup can be integrated much more easily into an existing data protection and compliance strategy than scattered consumer tools. (Capterra)

8. Governance & AI strategy: From individual tools to enterprise solutions

If you don't want to leave AI in your company to chance, you need more than just a tool:

- Take inventory

  - Who is already using which AI tools and for what purposes?

  - Which data flows where?

- Define target vision

  - Which use cases should be officially supported (e.g., text, code, research, meeting notes)?

  - How does AI fit into your existing digital and SEO strategy?

- Consolidate the tool landscape

  - Instead of five different AI services running in the background: a shared platform, e.g., InnoGPT, supplemented by clearly defined special tools.

- Establish guidelines and processes

  - AI policy, data processing agreements, record of processing activities, training courses.

  - Role concept, escalation and approval processes.

- Monitoring and continuous adjustment

  - AI Act implementation, new guidelines from supervisory authorities, technical developments: governance is not a one-time project, but an ongoing process. (AKEuropa)

Why this is more than just "best practice":

The AI Act requires – gradually over 2025–2027 – a documented governance system for companies that use AI. Without an internally anchored AI governance structure (guidelines, training, monitoring), it will be very difficult in the medium term to prove to supervisory authorities and business partners that AI is being used in a controlled, responsible, and compliant manner.

9. Checklist: Making AI in your company GDPR- and AI Act-ready

A brief checklist that you can start using today:

- Take inventory – Where is AI already being used in the company (tools, data types, processes)?

- Perform risk assessment – Which operations are non-critical, and which involve sensitive data or core processes?

- Review legal bases and contracts – GDPR, data processing agreements, data flows, third-country transfers.

- Define approved platform – e.g., InnoGPT as a central, GDPR-compliant AI solution for teams.

- Adopt AI policy – understandable, practical, with examples and dos and don'ts.

- Training & Enablement – Empower employees to use AI in a targeted, responsible, and efficient manner – and document this training (AI literacy).

- Documentation & Monitoring – Take the logic of the AI Act and the GDPR seriously: document, evaluate, refine. (EUR-Lex)
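The first two checklist items, inventory and risk assessment, can begin as a simple structured list instead of a large project. The sketch below records each AI use and sorts it into "stop", "review", or "ok". The field names and the triage thresholds are illustrative assumptions of mine for a first sorting pass; they are not legal criteria and do not replace a proper assessment.

```python
from dataclasses import dataclass


@dataclass
class AIToolUse:
    """One entry in the AI inventory (hypothetical structure)."""
    tool: str
    department: str
    personal_data: bool
    special_categories: bool  # data under Art. 9 GDPR, e.g. health data
    dpa_signed: bool          # data processing agreement in place
    hosted_in_eu: bool


def triage(use: AIToolUse) -> str:
    """Rough first sorting of an inventory entry (illustrative rules only)."""
    # Personal data without a data processing agreement: stop and clarify first.
    if use.personal_data and not use.dpa_signed:
        return "stop"
    # Sensitive data or third-country processing: needs a closer look
    # (e.g. DPIA, transfer assessment).
    if use.special_categories or (use.personal_data and not use.hosted_in_eu):
        return "review"
    return "ok"


if __name__ == "__main__":
    inventory = [
        AIToolUse("Free chatbot", "sales", True, False, False, False),
        AIToolUse("EU platform", "hr", True, True, True, True),
        AIToolUse("EU platform", "marketing", False, False, True, True),
    ]
    for entry in inventory:
        print(entry.department, entry.tool, triage(entry))
```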

Sources

- Data Protection Conference (DSK), Guidance on "AI and Data Protection" (as of May 6, 2024) – Criteria for selecting and using AI applications in companies and public authorities. (data protection conference)

- European Data Protection Board (EDPB), "Report of the work undertaken by the ChatGPT Taskforce" (May 24, 2024) – First coordinated European assessment of ChatGPT's data processing practices in light of the GDPR. (EDPB)

- Information sheet "Use of generative AI and data protection" (University / Data Protection Officer, as of 04/2024) – Practical guide to using generative AI, especially ChatGPT, from a data protection perspective. (Data protection HWR Berlin)

- eRecht24, "Is ChatGPT usable in compliance with data protection regulations?" (2025) – Classification for data protection-friendly use of ChatGPT, including information on training use and settings. (eRecht24)

- Regulation (EU) 2024/1689 – Artificial Intelligence Act (AI Act) – Official EU legal framework for AI, including a risk-based approach, governance obligations, and application timeline. (EUR-Lex)

- EU AI Act Service Desk & FPF Timeline – Overview of the phased implementation of the AI Act until 2026/2027. (AI Act Service Desk)

- Reuters & Le Monde (2025), reports on proposals by the European Commission to extend the timeline for high-risk obligations under the AI Act – Indications of planned delays to certain regulations until 2027. (Reuters)

- Handelsblatt Live, "AI and data protection: How to use AI systems in compliance with the GDPR" (2025) – Classification of the interaction between the GDPR, BDSG, and AI Act in a corporate context. (Handelsblatt Live)

- ASCOMP, sysbus.eu, Capterra – Information about InnoGPT as a GDPR-compliant AI platform with EU hosting and a zero-retention approach. (ASCOMP, sysbus.eu, Capterra)

- arXiv – Technical articles on retrieval-based AI applications and knowledge management scenarios in a corporate context. (arXiv)

- AKEuropa – Analyses and background reports on the practical implementation of the AI Act in Europe. (AKEuropa)

Note: This article provides technical guidance on the use of AI systems in compliance with the GDPR and AI Act. It does not replace legal advice. For binding assessments, you should consult legal experts.
